Run Jupyter on cluster

Jupyter jobs can be submitted to any partition associated with your Slurm account/association. The notebook server runs on a compute node, not on your machine. To reach it, you forward a port over SSH and then open it from `localhost` in your browser.
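You need an account and partition for the `#SBATCH --account` and `--partition` lines of the job script. One way to find which ones you can use is to query your associations with `sacctmgr`. The sketch below assumes `sacctmgr` is on your `PATH` on the frontend node. Both function names are hypothetical and are not provided by the cluster. An empty partition field is shown as `<any>` because such an association is usually not tied to a single partition.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not provided by the cluster): list the Slurm
# accounts/partitions the current user is associated with.

# Turn "account|partition" lines (sacctmgr -P output) into readable text.
format_associations() {
  local account partition
  while IFS='|' read -r account partition; do
    [ -n "$account" ] || continue        # skip blank lines
    echo "account=${account} partition=${partition:-<any>}"
  done
}

list_my_associations() {
  if ! command -v sacctmgr >/dev/null 2>&1; then
    echo "sacctmgr not found; run this on the cluster frontend node" >&2
    return 1
  fi
  # -n: no header line; -P: fields separated by '|' with no trailing '|'
  sacctmgr -n -P show associations user="${USER:-$(whoami)}" \
    format=Account,Partition | format_associations
}
```

Running `list_my_associations` on the frontend node prints one line per association. Each `account=` value is a candidate for `--account`.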

In this tutorial, we will create a Python environment with the following:

- Python 3.7
- TensorFlow-GPU 2.2
- Jupyter
- CUDA 10.1
- cuDNN 7

- Log in to the frontend node, then create a Python environment:

```bash
module load Anaconda3
conda create -n jupyter python=3.7
source activate jupyter
```

- Install TensorFlow-GPU 2.2 and Jupyter:

```bash
pip install jupyter tensorflow-gpu==2.2.0
```

- Test the Jupyter environment on a GPU node using `sinteractive`:

```
[songpon@ist-frontend-001 ~]$ sinteractive -p gpu-cluster --gpus 1
[songpon@ist-gpu-02 ~]$ module load Anaconda3 CUDA/10.1 cuDNN/7
[songpon@ist-gpu-02 ~]$ source activate jupyter
(jupyter) [songpon@ist-gpu-02 ~]$ python
Python 3.7.7 (default, May 7 2020, 21:25:33)
[GCC 7.3.0] :: Anaconda, Inc. on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import tensorflow as tf
>>> tf.test.is_gpu_available(cuda_only=False, min_cuda_compute_capability=None)
WARNING:tensorflow:From <stdin>:1: is_gpu_available (from tensorflow.python.framework.test_util) is deprecated and will be removed in a future version.
Instructions for updating:
Use `tf.config.list_physical_devices('GPU')` instead.
2020-07-21 00:58:09.333280: I tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 AVX512F FMA
2020-07-21 00:58:09.340433: I tensorflow/core/platform/profile_utils/cpu_utils.cc:102] CPU Frequency: 2500000000 Hz
2020-07-21 00:58:09.340613: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x55f38d0083c0 initialized for platform Host (this does not guarantee that XLA will be used). Devices:
2020-07-21 00:58:09.340657: I tensorflow/compiler/xla/service/service.cc:176]   StreamExecutor device (0): Host, Default Version
2020-07-21 00:58:09.351457: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcuda.so.1
2020-07-21 00:58:10.250677: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x55f38d0d02e0 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:
2020-07-21 00:58:10.250811: I tensorflow/compiler/xla/service/service.cc:176]   StreamExecutor device (0): Tesla V100-SXM2-32GB, Compute Capability 7.0
2020-07-21 00:58:10.252327: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1561] Found device 0 with properties:
pciBusID: 0000:18:00.0 name: Tesla V100-SXM2-32GB computeCapability: 7.0
coreClock: 1.53GHz coreCount: 80 deviceMemorySize: 31.75GiB deviceMemoryBandwidth: 836.37GiB/s
2020-07-21 00:58:10.307168: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1
2020-07-21 00:58:10.546111: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcublas.so.10
2020-07-21 00:58:10.645813: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcufft.so.10
2020-07-21 00:58:10.766945: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcurand.so.10
2020-07-21 00:58:10.909644: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusolver.so.10
2020-07-21 00:58:11.021832: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcusparse.so.10
2020-07-21 00:58:11.196910: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudnn.so.7
2020-07-21 00:58:11.199688: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1703] Adding visible gpu devices: 0
2020-07-21 00:58:11.199789: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library libcudart.so.10.1
2020-07-21 00:58:11.201419: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1102] Device interconnect StreamExecutor with strength 1 edge matrix:
2020-07-21 00:58:11.201495: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1108]      0
2020-07-21 00:58:11.201553: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1121] 0:   N
2020-07-21 00:58:11.204223: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1247] Created TensorFlow device (/device:GPU:0 with 30262 MB memory) -> physical GPU (device: 0, name: Tesla V100-SXM2-32GB, pci bus id: 0000:18:00.0, compute capability: 7.0)
True
```

The final `True` means TensorFlow can see the GPU. As the deprecation warning notes, `tf.config.list_physical_devices('GPU')` replaces `tf.test.is_gpu_available()`. It returns a non-empty list when a GPU is visible.

- Create a file `jupyter.sub`. DO NOT FORGET to configure the Slurm arguments (partition, account, GPUs and time limit) for your own association:

```bash
#!/bin/bash -l
#SBATCH --job-name=jupyter
#SBATCH --output=out
#SBATCH --nodes=1
#SBATCH --partition=gpu-cluster
#SBATCH --account=scads
#SBATCH --gres=gpu:1
#SBATCH --time=0:30:0

# Load the same software stack that was tested with sinteractive
module load Anaconda3
module load CUDA/10.1
module load cuDNN/7

# Random port for Jupyter on this compute node
port=$(shuf -i 6000-9999 -n 1)
USER=$(whoami)
node=$(hostname -s)

# Write connection instructions into the Slurm output file
cat <<EOF
Jupyter server is running on: $(hostname)
Job starts at: $(date)

Step 1:
- Open a terminal and run this command:
ssh -L 8888:$node:$port $USER@10.204.100.209 -i ~/.ssh/vistec_id_rsa

Step 2: Keep the terminal window from the previous step open. Now open a browser.
Find the line "The Jupyter Notebook is running at: $(hostname)" below;
the URL is something like: http://localhost:8888/?token=XXXXXXXX (see your token below)

You should be able to connect to the Jupyter notebook running remotely on the
galaxy compute node with the above URL.
---------------------------------------------------------------------------------------------------------
EOF

# Do not reuse the login session's runtime directory; use the job's
# temporary directory instead when the cluster provides one
unset XDG_RUNTIME_DIR
if [ -n "$SLURM_JOBTMP" ]; then
    export XDG_RUNTIME_DIR=$SLURM_JOBTMP
fi

source activate jupyter
jupyter notebook --no-browser --port "$port" --notebook-dir="$(pwd)" --ip="$node"
```
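The `shuf` line in `jupyter.sub` picks a random port without checking whether another process on the same node is already listening on it. If it is, Jupyter will not be listening on the port printed in the `ssh` command. Below is a minimal sketch of a check. It assumes bash was built with `/dev/tcp` redirection, which is the case on most Linux distributions. `pick_free_port` is a hypothetical helper, not a cluster tool.

```bash
#!/usr/bin/env bash
# Hypothetical helper: choose a random port in [lo, hi] that no process on
# $host is already listening on. Each candidate is probed with bash's
# /dev/tcp redirection: a successful connect means the port is taken.
pick_free_port() {
  local host=${1:-127.0.0.1} lo=${2:-6000} hi=${3:-9999}
  local attempt port
  for ((attempt = 0; attempt < 50; attempt++)); do
    port=$(shuf -i "${lo}-${hi}" -n 1)
    # Connect in a subshell so the file descriptor closes right away.
    # Probing the node itself, a closed port is refused immediately.
    if ! (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      echo "$port"          # connection failed: treat the port as free
      return 0
    fi
  done
  echo "no free port found in ${lo}-${hi} on ${host}" >&2
  return 1
}
```

In `jupyter.sub`, you could define this function above the `port=` line and replace that line with `port=$(pick_free_port "$(hostname -s)")`. A small race remains between the check and Jupyter binding the port, so this only lowers the chance of a clash.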

- Submit the job to Slurm and check its state with `squeue`:

```bash
sbatch jupyter.sub
squeue -u $USER
```

- Once the job is running, check the output file:

```
[songpon@ist-frontend-001 jupyter]$ cat out
Jupyter server is running on: ist-dgx04
Job starts at: Mon Jul 20 07:22:36 +07 2020

Step 1 :
- Open a terminal and run this command.
ssh -L 8888:ist-dgx04:7432 songpon@10.204.100.209 -i ~/.ssh/vistec_id_rsa

Step 2: Keep the terminal windows in the previouse step open. Now open browser, find the line with

The Jupyter Notebook is running at: ist-dgx04

the URL is something: http://localhost:8888/?token=XXXXXXXX (see your token below)

you should be able to connect to jupyter notebook running remotly on galaxy compute node with above url
---------------------------------------------------------------------------------------------------------
[I 07:22:36.984 NotebookApp] Serving notebooks from local directory: /ist/users/songpon/playground/jupyter
[I 07:22:36.984 NotebookApp] The Jupyter Notebook is running at:
[I 07:22:36.984 NotebookApp] http://ist-dgx04:7432/?token=394000d3201aceb433e1b3c30eec633401c51506e36789bc
[I 07:22:36.984 NotebookApp]  or http://127.0.0.1:7432/?token=394000d3201aceb433e1b3c30eec633401c51506e36789bc
[I 07:22:36.984 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
[C 07:22:36.988 NotebookApp]

    To access the notebook, open this file in a browser:
        file:///ist/users/songpon/.local/share/jupyter/runtime/nbserver-20608-open.html
    Or copy and paste one of these URLs:
        http://ist-dgx04:7432/?token=394000d3201aceb433e1b3c30eec633401c51506e36789bc
     or http://127.0.0.1:7432/?token=394000d3201aceb433e1b3c30eec633401c51506e36789bc
```

- On your local machine, run the `ssh` command printed in the output file. Do not close this terminal.

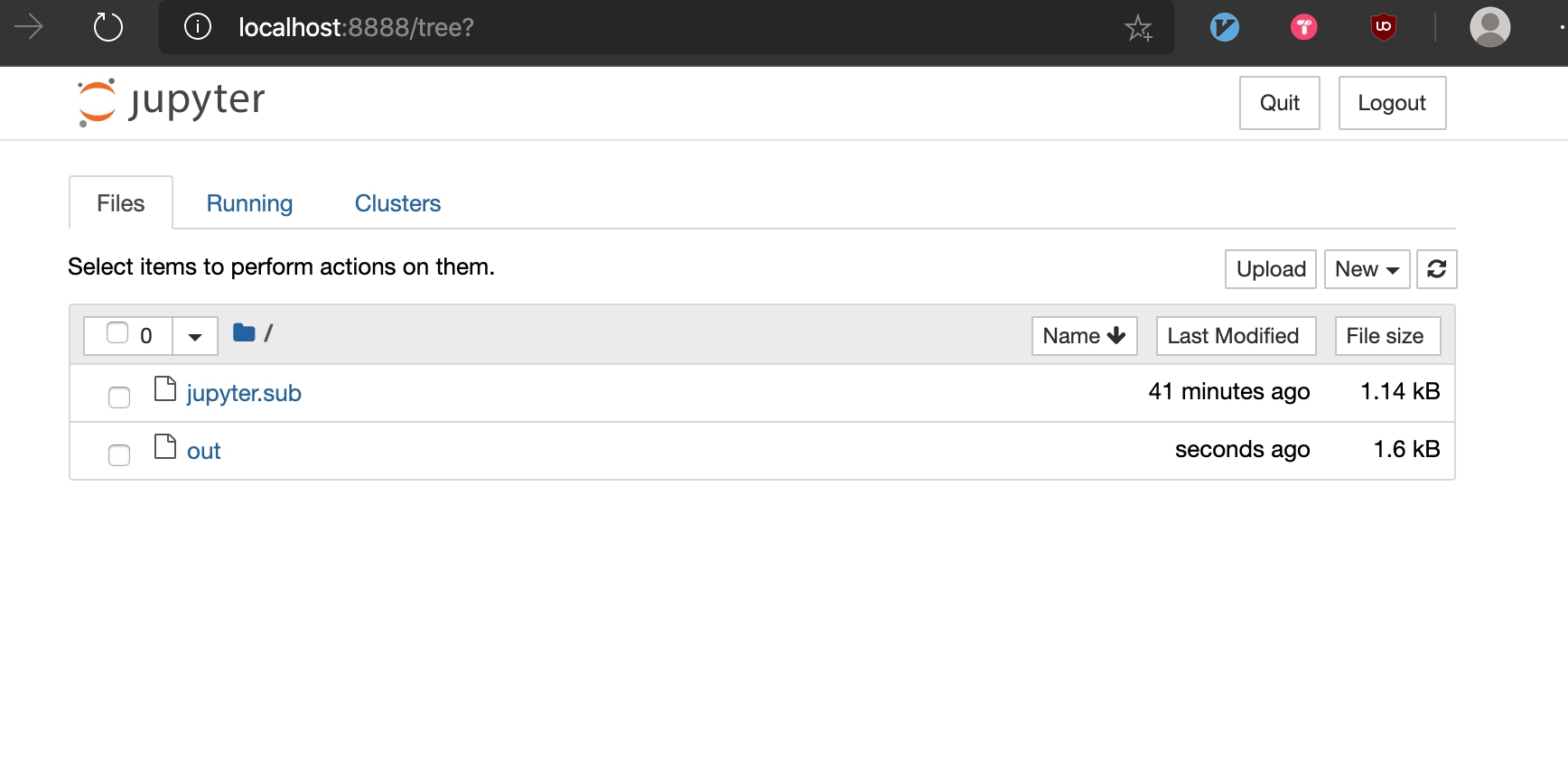

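```bash
ssh -L 8888:ist-dgx04:7432 songpon@10.204.100.209 -i ~/.ssh/vistec_id_rsa
```

This forwards port 8888 on your machine, through `10.204.100.209`, to port 7432 on the compute node `ist-dgx04`, where Jupyter is listening.

- Access Jupyter from your web browser:

```
http://localhost:8888
```

If Jupyter asks for a token, use the one printed in the output file, for example `http://localhost:8888/?token=XXXXXXXX` with `XXXXXXXX` replaced by your token.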
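Copying the node, port and token out of `out` by hand is error-prone. The sketch below reads the Slurm output file and prints both the tunnel command and the browser URL. It assumes the file looks like the sample output of `cat out` (Jupyter prints a `http://<node>:<port>/?token=<hex>` line) and that you run it on the frontend node, where `out` is written. `jupyter_connect_info` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the ssh tunnel command and browser URL for the
# Jupyter server started by jupyter.sub, based on its Slurm output file.
# Usage: jupyter_connect_info OUTFILE USER LOGIN_HOST [SSH_KEY]
jupyter_connect_info() {
  local outfile=$1 user=$2 login=$3 key=${4:-}
  local local_port=8888          # local end of the tunnel, as in the tutorial
  local url hostport node port token cmd

  # First URL with a hex token, skipping the 127.0.0.1/localhost variants.
  # The "XXXXXXXX" placeholder line has no hex token, so it never matches.
  url=$(grep -oE 'http://[^ ]+\?token=[0-9a-f]+' "$outfile" \
          | grep -v -e '127\.0\.0\.1' -e 'localhost' | head -n 1)
  if [ -z "$url" ]; then
    echo "no Jupyter URL in $outfile yet; is the job running?" >&2
    return 1
  fi

  hostport=${url#http://}        # ist-dgx04:7432/?token=...
  hostport=${hostport%%/*}       # ist-dgx04:7432
  node=${hostport%%:*}
  port=${hostport##*:}
  token=${url##*token=}

  cmd="ssh -L ${local_port}:${node}:${port} ${user}@${login}"
  if [ -n "$key" ]; then
    cmd="$cmd -i $key"
  fi
  echo "$cmd"
  echo "http://localhost:${local_port}/?token=${token}"
}
```

For the sample output file, `jupyter_connect_info out songpon 10.204.100.209 ~/.ssh/vistec_id_rsa` prints a `ssh -L 8888:ist-dgx04:7432 songpon@10.204.100.209 -i ...` tunnel command, followed by a `http://localhost:8888/?token=...` URL built from the token in the file.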